11 lessons learned while trying to become a data-driven company

For me, it was a significant change from leading a large B2B product to co-founding a small B2C startup.

From the very beginning, it was clear that data would play a significant role in our decision-making process. We moved fast, made a lot of experimental changes, and didn't have large customer representatives to talk to when making our decisions. I had to change my habits and replace humans with numbers.

We embedded Mixpanel, Google Analytics, AppsFlyer, Facebook SDKs, Crashlytics, and a bunch of other tools. We created our own dashboard as well as a unique and addictive mobile dashboard, and deployed a set of real-time logs. It was fun!

Over the first 2 years of our startup, we've learned the hard way that being a data-driven company is harder than it seems.

I would like to share with you some of the lessons learned while working with data. I believe our insights and experience can help young entrepreneurs, product managers, and UX designers trying to get the most out of their ideas and products.

Let’s start with some basic ones:

1. Base your decisions on facts, not opinions

During interviews, customers will probably tell you what they believe you want to hear.

Don't count on it.

When we had just started developing the product, our working assumption was that the services ordered through the marketplace would be performed either at the customers’ homes or at the nearest salons and spas. We interviewed potential customers and seemingly validated the assumption that both options were equally in demand.

So with the first few versions of Missbeez, customers could order our services to their homes or travel by themselves to the nearest salon/spa.

We learned the hard way that people act differently than how they think or say they will, especially when paid services are involved.

Can you guess how many customers selected the second option (going to the salon)?

After a month, 0% of our early customers had selected the salon option.

After 2 months it was an astonishing... 0%.

After 3 months, we had a devastating 1 salon order…

We gloriously failed to follow some of the most basic rules when developing a new product:

- Never trust what your potential customers are saying.

- Don’t base your decisions on opinions. Use data instead.

- Always start with a simple MVP (in this case, a dummy button that does nothing and only measures how many people tried to use it could have been enough).
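The dummy-button idea can be sketched in a few lines. This is only an illustration, not what we actually shipped: the `FakeDoorTracker` class, the `"salon"` option name, and the numbers are all hypothetical.

```python
from collections import Counter


class FakeDoorTracker:
    """Counts how often a not-yet-built option is shown and tapped."""

    def __init__(self) -> None:
        self.events = Counter()

    def record_impression(self, option: str) -> None:
        self.events[(option, "shown")] += 1

    def record_tap(self, option: str) -> None:
        # The button does nothing else; we only measure the intent.
        self.events[(option, "tapped")] += 1

    def tap_rate(self, option: str) -> float:
        shown = self.events[(option, "shown")]
        return self.events[(option, "tapped")] / shown if shown else 0.0


# Hypothetical numbers: 200 customers saw the button, 1 tapped it.
tracker = FakeDoorTracker()
for _ in range(200):
    tracker.record_impression("salon")
tracker.record_tap("salon")
print(f"salon tap rate: {tracker.tap_rate('salon'):.1%}")
```

A tap rate close to zero after a few weeks would have told us not to build the salon flow at all.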

2. Don’t blindly follow what the experts are saying

Remember: whatever works well for one product, may not work so well for another.

Along the road, we worked with a lot of experts and mentors in the field of mobile apps and mobile marketplaces who were extremely helpful in many cases.

We’ve learned that things that work perfectly for some apps sometimes do not work so well for others, because every product has its own unique characteristics. That's why you need to be a bit more skeptical about "best practices".

Being skeptical doesn't mean you're pessimistic, it means you're experienced.

Here's an interesting example:

With our product, customers have to pay for the services they order. This means they need to enter their payment details (credit card/PayPal) in order to secure the booking, although the payment only takes place when the treatment is complete.

Measuring our conversion rates throughout the funnel, we identified a huge drop when the app asks for the payment details. This step was the last step before the order was created and the app started to search for relevant candidates.

When we consulted some experts about it, we were advised to move the payment details step to a later stage, right after the booking is made.

The hypothesis was based on some known cognitive biases (such as 'choice-supportive bias', the 'sunk-cost fallacy', and the 'foot-in-the-door' technique) and was quite reasonable: when the booking becomes concrete and the engagement level goes up, it’s going to be much harder for the user to drop and hopefully easier to enter the payment details.

Makes sense, right?

Well, not so fast...

In other products, this theory might be true, but in our case (and believe me, there’s always: “in our case”) - it didn’t quite work as expected…

The conversion drop improved a little bit, but it moved to a later stage in the workflow and remained very big.

More importantly, the change had unexpected side effects: since the drop now came after the booking was already made, it caused a lot of frustration for the service providers, who had spent energy bidding for those orders and waiting for the booking system to confirm their time slots.

Lesson learned:

Tradeoffs exist in every decision we make, but sometimes when we are certain about something - we fail to predict those tradeoffs and the only way to truly understand them is to make the change, collect the relevant data, and analyze the outcomes.

- Always question your working assumptions.

- Beware of your own biases and don’t fall in love with your ideas.

- Measure every change you make in the product and analyze the results.

- Develop feature switches for quick rollbacks in cases where changes do not provide the results they were supposed to.
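As an illustration of the last point, here is a minimal sketch of a feature switch guarding the payment-step change described above. The `FeatureSwitches` class, the flag name, and the step names are assumptions made for this example; in a real product the flags would typically live in remote configuration so they can be flipped without a release.

```python
from __future__ import annotations


class FeatureSwitches:
    """In-memory flags; a real system would load these from remote config."""

    def __init__(self, defaults: dict[str, bool]) -> None:
        self._flags = dict(defaults)

    def is_on(self, name: str) -> bool:
        # Unknown flags are treated as off, which is the safe default.
        return self._flags.get(name, False)

    def set(self, name: str, enabled: bool) -> None:
        self._flags[name] = enabled


def checkout_steps(switches: FeatureSwitches) -> list[str]:
    """Return the order of the checkout steps for the current flag state."""
    if switches.is_on("payment_after_booking"):
        return ["choose_treatment", "booking", "payment_details"]
    return ["choose_treatment", "payment_details", "booking"]


switches = FeatureSwitches({"payment_after_booking": True})
print(checkout_steps(switches))

# The experiment did not deliver: roll back without shipping a new version.
switches.set("payment_after_booking", False)
print(checkout_steps(switches))
```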

3. There’s always more data to collect

When the time comes to make a decision - you'll wish you had more data available to support you.

Collect as much data as possible because you cannot predict what will be needed at later stages.

Don't worry about performance or efficiency; you’ll have plenty of time (and developers!) to worry about that when you become a unicorn...

Build a strong data infrastructure to track cohorts, user origins, segmentation, marketing channels, user milestones, user history, and more.

Every feature you develop needs to be defined with a set of measurements.

Turn Boolean fields into dates so you can store both the evidence of each event and the time it happened. You can thank me later for that one... ;-)
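Here is a small sketch of the Boolean-to-date idea. The `Customer` model and its field names are hypothetical; the point is only that a nullable timestamp answers both "did it happen?" and "when?".

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class Customer:
    name: str
    # Instead of `added_payment_method: bool`, store when it happened.
    # None means "never happened".
    payment_method_added_at: Optional[datetime] = None

    @property
    def has_payment_method(self) -> bool:
        # The old Boolean is still available, derived from the timestamp.
        return self.payment_method_added_at is not None

    def add_payment_method(self, when: Optional[datetime] = None) -> None:
        # Keep the first occurrence so the original event time is preserved.
        if self.payment_method_added_at is None:
            self.payment_method_added_at = when or datetime.now(timezone.utc)


customer = Customer("Dana")
print(customer.has_payment_method)
customer.add_payment_method()
print(customer.has_payment_method, customer.payment_method_added_at)
```

With the timestamp in place, questions such as "how long after signup do customers add a payment method?" can be answered later without a migration.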

4. Logs shouldn’t be a developers' thing only

When you release a new feature, you are curious to see what happens with it, how users react to it, and how much traction it creates.

While we use plenty of tools to keep track of new features (e.g. monitoring tools, logs, analytics), we also added a custom layer that proved to be extremely useful on countless occasions: a smart feed of activities that is accessible to everyone in the company and can be pushed via email.

To keep track of everything that's going on in our system, we created a trigger-based log composed of small capsules of actions, written in human-friendly (non-technical) language and including quick links to our dashboards.

We use it to monitor unusual workflows in the system or to get push alerts when new features or promotions are used for the first few times. Every person in the company can “subscribe” to different push events and narrow down the feed.
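To make the idea more concrete, here is a rough sketch of such a feed. It is not our actual implementation: the `Activity` and `ActivityFeed` names, the event kinds, and the example.com links are all made up for illustration, and the "push" is just a list standing in for an email sender.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Activity:
    kind: str            # e.g. "promo_used" (hypothetical event kind)
    message: str         # human-friendly, non-technical description
    dashboard_link: str  # quick link to the relevant dashboard page
    at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class ActivityFeed:
    def __init__(self) -> None:
        self.history = []                    # searchable, step-by-step log
        self.subscribers = defaultdict(set)  # event kind -> email addresses
        self.outbox = []                     # stand-in for an email sender

    def subscribe(self, email: str, kind: str) -> None:
        self.subscribers[kind].add(email)

    def publish(self, activity: Activity) -> None:
        self.history.append(activity)
        for email in sorted(self.subscribers.get(activity.kind, ())):
            self.outbox.append((email, f"{activity.message} ({activity.dashboard_link})"))

    def search(self, text: str) -> list:
        return [a for a in self.history if text.lower() in a.message.lower()]


feed = ActivityFeed()
feed.subscribe("ops@example.com", "promo_used")
feed.publish(Activity("promo_used", "Customer 42 used promo SPRING for the first time",
                      "https://dashboard.example.com/customers/42"))
print(feed.outbox)
```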

This tool is great: it creates transparency and helps our operations team find past events that may impact current customer issues. It eliminates the dependency on the technical logs for analyzing unusual user behavior (not bugs) and keeps a search-friendly history of everything that happened in the field, step by step, rather than just a snapshot of the current state.

While this is not a replacement for any of our other monitoring tools - this human-friendly log was one of the best things we’ve invented and helped us a lot in the early days of our product (and still does).

5. Market changes can be much stronger than any product or feature change

It took us more than a year to understand that the lean startup methodology of build-measure-learn is too simplistic and is not sufficient for our needs.

Throwing experiments into production is like throwing a paper airplane from a window.

You create the best model you can and give it a throw.

You analyze the course of the flight, and modify your airplane based on your analysis: you lift up the front, add a small curve to the wings, do whatever it takes to make it better.

You can repeat this experiment over and over again, but soon enough you’ll realize there’s no consistent correlation between your modifications and the performance of your airplane.

Soon enough you realize it's not you, it's the wind...

For us, the wind was the market we operate in.

Changes in our product performance were caused by external forces such as weather changes (you won’t believe the impact of a sunny day in the middle of winter on certain beauty treatments), as well as our own activities (changes in our marketing campaigns or user acquisition that could change our conversion rates more dramatically than any UI optimization in the product), and more.

Since we constantly made changes and progressed on all fronts, we could never accurately compare one week to the previous one. There were simply too many differences between the two, other than the product changes we wanted to analyze.

Which pretty much leads me to the next lesson:

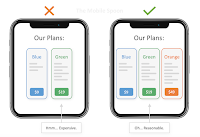

You can’t replace A/B tests with feature switches.

It’s great to be able to turn features on and off in production (it’s one of the keys to creating a flexible product that is easily managed), but if you wish to perform serious product experiments you need a way to neutralize market noise and compare apples to apples: same cohorts, same segments, same time frames.

If you haven't implemented a decent A/B split mechanism yet - do it yesterday.

Do it.

Do it.

[Read more about it here: Practicing Product Analytics in a Market Full of Uncertainties]

A/B tests are the most efficient method to analyze your product enhancements while neutralizing market noise. You want to try something new, and the only way to know how it performed is by neutralizing seasonal or other market events. A/B tests allow that.

Some A/B options will require additional front-end development, some will require server-side development. Both options will need a mechanism that assigns the users to each group (group A, group B and sometimes group C). Note that you may need to balance each group with users from different segments (old vs. new, paying customers vs. non-payers).

This means some extra development effort for every feature you wish to split test, even if you use a product that makes it easier.
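One common way to build the assignment mechanism is to hash the user ID together with the experiment name, so each user always lands in the same group without storing anything. The sketch below assumes that approach; the function names, the experiment name, and the user data are hypothetical. Note that hashing alone does not guarantee balanced segments, so the report is there to check the split rather than to enforce it.

```python
import hashlib
from collections import Counter


def assign_group(user_id: str, experiment: str, groups=("A", "B")) -> str:
    """Deterministically map a user to a group for one experiment."""
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(groups)
    return groups[bucket]


def split_report(users, experiment: str) -> Counter:
    """Count (segment, group) pairs to check that segments are balanced."""
    return Counter(
        (segment, assign_group(user_id, experiment)) for user_id, segment in users
    )


# Hypothetical users: (user_id, segment)
users = [(f"user-{i}", "paying" if i % 4 == 0 else "non-paying") for i in range(1000)]
report = split_report(users, "travel_fee_v1")
for (segment, group), count in sorted(report.items()):
    print(segment, group, count)
```

Including the experiment name in the hash means that the same user can fall into different groups in different experiments, which avoids always testing new ideas on the same half of your users.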

6. Have a single source of truth, and own it

When you work with app attribution tools, mobile analytics, marketing campaigns, user acquisition and more - you end up working with a lot of different systems. It's crucial to bring back the important stuff home and own a single source of truth.

The main benefit of owning all the data goes beyond convenience and BI - it allows you to base your business logic on data that was originally collected elsewhere. An example can be mobile app attribution: although this data is collected by the likes of AppsFlyer, it's important to store the user origin and have it available for future analytics and the product's business logic.
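As a rough sketch of what "bringing the data home" can look like, the example below stores each user's origin in a local table and uses it in business logic. Everything here is an assumption for illustration: the table layout, the source values, and the `welcome_offer` rule are invented, and the code does not call any attribution vendor's API; it only assumes you already received the origin from such a tool.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    """CREATE TABLE user_origin (
           user_id     TEXT PRIMARY KEY,
           source      TEXT NOT NULL,   -- e.g. 'referral', 'facebook_ads'
           campaign    TEXT,
           recorded_at TEXT NOT NULL
       )"""
)


def store_origin(conn, user_id, source, campaign, recorded_at):
    # INSERT OR IGNORE keeps the first recorded origin for each user.
    conn.execute(
        "INSERT OR IGNORE INTO user_origin VALUES (?, ?, ?, ?)",
        (user_id, source, campaign, recorded_at),
    )


def welcome_offer(conn, user_id):
    """Hypothetical business rule that depends on where the user came from."""
    row = conn.execute(
        "SELECT source FROM user_origin WHERE user_id = ?", (user_id,)
    ).fetchone()
    if row and row[0] == "referral":
        return "friend_discount"
    return "standard"


store_origin(conn, "u1", "referral", None, "2017-01-05T10:00:00Z")
print(welcome_offer(conn, "u1"))
```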

7. Early adopters behave differently than the majority of users

I recently wrote about the good, the bad, and the ugly truth about early adopters.

Early adopters are the first to give your product a chance. They provide feedback, they are vocal, they are active in social networks, they are thought leaders, they are tech-savvy, they are superheroes!

Which means that they are different from the majority of users: they are less sensitive to product limitations and bugs, they are less sensitive to price, they ask for power-user features, and they are biased by nature.

The result is that early KPIs might show higher conversion rates and better retention rates than you will see further down the road, because early adopters behave differently than the mass market.

Keep that in mind when you validate your business plan assumptions with early numbers that might not be 100% accurate.

[Read: Beware of early adopters]

8. Don’t overestimate the vocal minority

They are vocal, but they are not necessarily the majority.

Worry less about the vocal users, and more about the majority of users that act quietly.

When we realized we had a fulfillment problem with small and urgent orders, we decided to add a travel fee and raise the price bar a little bit.

Our hypothesis was that some customers would drop because of the fee, some would add another treatment to avoid the fee, and some would stick with their original orders (but the additional travel fee would increase the engagement level of the supply side).

Basic economic laws, right?

Immediately after adding the travel fee, we got a fair number of emails from angry customers complaining about it.

Some of those emails were pretty furious…

Despite going through a few unpleasant weeks, we stuck with the plan, and the results were quite amazing. The change worked exactly as we had hoped: the number of small urgent orders went down, the average deal size went up, and the fulfillment rate improved.

While the vocal minority was shouting at our customer success team, the silent majority acted differently, and the data showed that the changes we were hoping to achieve did happen.

9. Beware of long-term consequences of your product changes

Some changes cannot be measured immediately because their impact takes time.

This one is relevant when working on improving long-term KPIs like retention rates.

In our business, retention is measured on a monthly basis due to the typical frequency of use.

Changes we make in order to improve retention (such as adding loyalty programs) cannot be measured on a weekly basis and require a lot of patience (sometimes even a few months).

On the other hand, there is always a risk that some of the changes we make will not produce noticeable results, but will cause slow side effects that are difficult to follow.
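For reference, monthly retention by cohort can be computed along these lines. This is a simplified sketch under my own assumptions: a user belongs to the cohort of their first order month, counts as "retained" in any later month in which they ordered again, and the order data is invented.

```python
from collections import defaultdict
from datetime import date


def month_index(d: date) -> int:
    return d.year * 12 + d.month


def month_label(idx: int) -> str:
    year, month0 = divmod(idx - 1, 12)
    return f"{year}-{month0 + 1:02d}"


def monthly_retention(orders):
    """orders: iterable of (user_id, order_date).

    Returns {cohort 'YYYY-MM': {months_since_first_order: share_of_cohort}}.
    """
    active = defaultdict(set)  # user -> months in which they ordered
    for user, d in orders:
        active[user].add(month_index(d))

    sizes = defaultdict(int)
    counts = defaultdict(lambda: defaultdict(int))
    for user, months in active.items():
        start = min(months)  # the cohort is the month of the first order
        sizes[start] += 1
        for m in months:
            counts[start][m - start] += 1

    return {
        month_label(start): {off: n / sizes[start] for off, n in sorted(c.items())}
        for start, c in sorted(counts.items())
    }


# Hypothetical orders
orders = [
    ("a", date(2017, 1, 5)), ("a", date(2017, 2, 10)),
    ("b", date(2017, 1, 20)),
    ("c", date(2017, 2, 1)),
]
print(monthly_retention(orders))
```

A change made in March will only show up in the "month 1" column of the March cohort in April, which is why this kind of KPI demands patience.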

10. Getting clear answers is never easy

Over the past few years, we’ve run countless product experiments that have failed to deliver clear insights.

Sometimes the differences between options A and B were too small (less than 5%), sometimes identical tests provided different results, and sometimes the results were not explicit enough.
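One way to judge whether a small difference is real or just noise is a two-proportion z-test. The sketch below is a generic textbook version, not the method we used, and the conversion numbers are invented to show how the same relative difference can be inconclusive with a small sample and convincing with a large one.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # no variation at all, nothing to compare
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided, normal approximation
    return z, p_value


# Hypothetical: 10.0% vs 10.5% conversion, with 1,000 users per group...
print(two_proportion_z_test(100, 1000, 105, 1000))
# ...and the same rates with 100,000 users per group.
print(two_proportion_z_test(10_000, 100_000, 10_500, 100_000))
```

With the smaller sample the p-value is far above the usual 0.05 threshold, so the test cannot tell the two options apart; with the larger sample the same rates become a clear signal.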

Just recently we made a change in the product (through an A/B test) that resulted in slightly better conversion rates but caused a side effect and a lot of customer complaints. This misalignment encouraged us to keep experimenting until we found a compromise option that is not as good in terms of conversion numbers, but provides a much better user experience and drives higher satisfaction rates.

When working with data, patience and persistence are a must.

11. There’s always more than meets the eye

And no, I'm not finishing with this one just because I'm a Transformers fan.

It's real. Things are always more complicated than they seem to be.

Have you ever used an app and thought to yourself: "This UI sucks! I wonder why they didn't place the button where it should be?!"

It happens a lot, but in many cases, there's a rationale behind those design considerations, stories that someone from the outside just can't understand without knowing all the details.

As an example, we recently added a step to a relatively simple action and got rid of some useful default values on purpose, just to prevent some actions from being performed too "casually" or accidentally by our service providers.

We deliberately made the app less convenient in order to increase the users' attention.

While those changes were perceived to be counter-productive - they were completely data-driven and we knew exactly why we made them. Some service providers complained about these changes at first but based on the data we saw that the changes achieved their goals.

Follow me on Twitter @gilbouhnick, or subscribe to my newsletter to get some occasional posts directly to your inbox.
